About Me

Hi! I’m Dayoon Ko 😊, a Ph.D. candidate in Computer Science and Engineering at Seoul National University, advised by Prof. Gunhee Kim.

I’m broadly interested in how large language models can keep up with a world where information and media change very quickly. Lately, I’ve been working on three kinds of problems:

- How to help search agents scale their reasoning and retrieval abilities

- How to help LLMs and RAG systems stay updated as facts or entities shift

- How to help models make sense of messy, real-world multimodal data

Outside of research, I enjoy dancing 🎶 and doing CrossFit 🏋🏻‍♀️. Staying active keeps my brain happy!

🔥 Recent News

[January 2026] Our paper "Hybrid Deep Searcher", completed during my internship at LG AI Research, has been accepted at ICLR 2026! 🎉

[March 2025] Started a research internship at LG AI Research, Superintelligence Lab!

Selected Publications

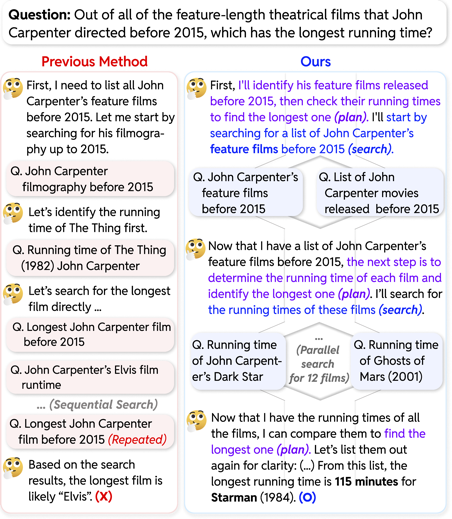

Hybrid Deep Searcher: Scalable Parallel and Sequential Search Reasoning

ICLR 2026

We propose a scalable search agent that dynamically combines parallel and sequential search strategies for multi-hop QA with retrieval-augmented generation (RAG). We also introduce HDS-QA, a training dataset for this setting, and achieve significant improvements on multi-hop QA.

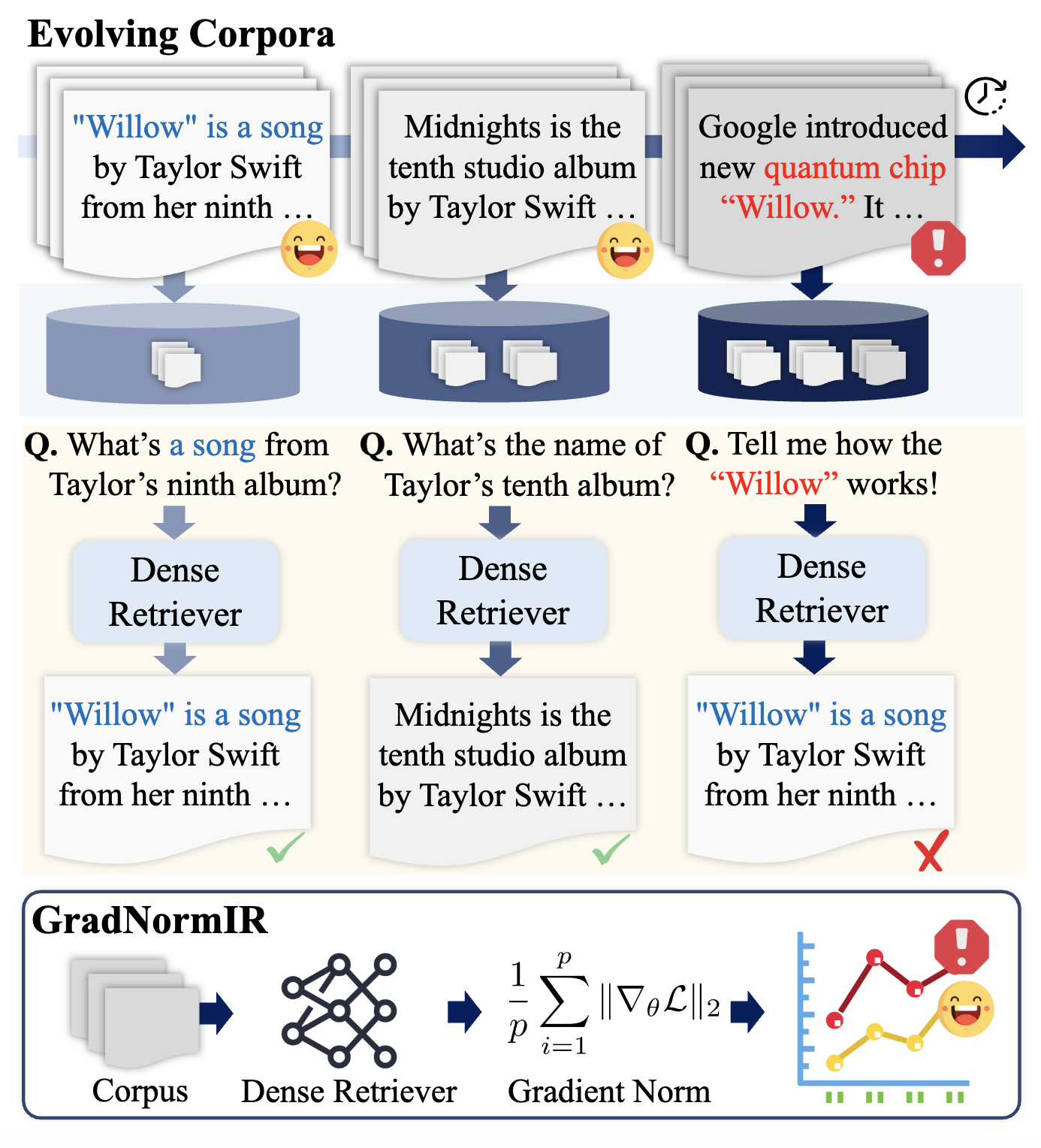

When Should Dense Retrievers Be Updated in Evolving Corpora? Detecting Out-of-Distribution Corpora Using GradNormIR

ACL 2025 Findings

We propose GradNormIR, an unsupervised method that detects out-of-distribution shifts in document collections using gradient norms, enabling timely updates of dense retrievers without manual intervention.

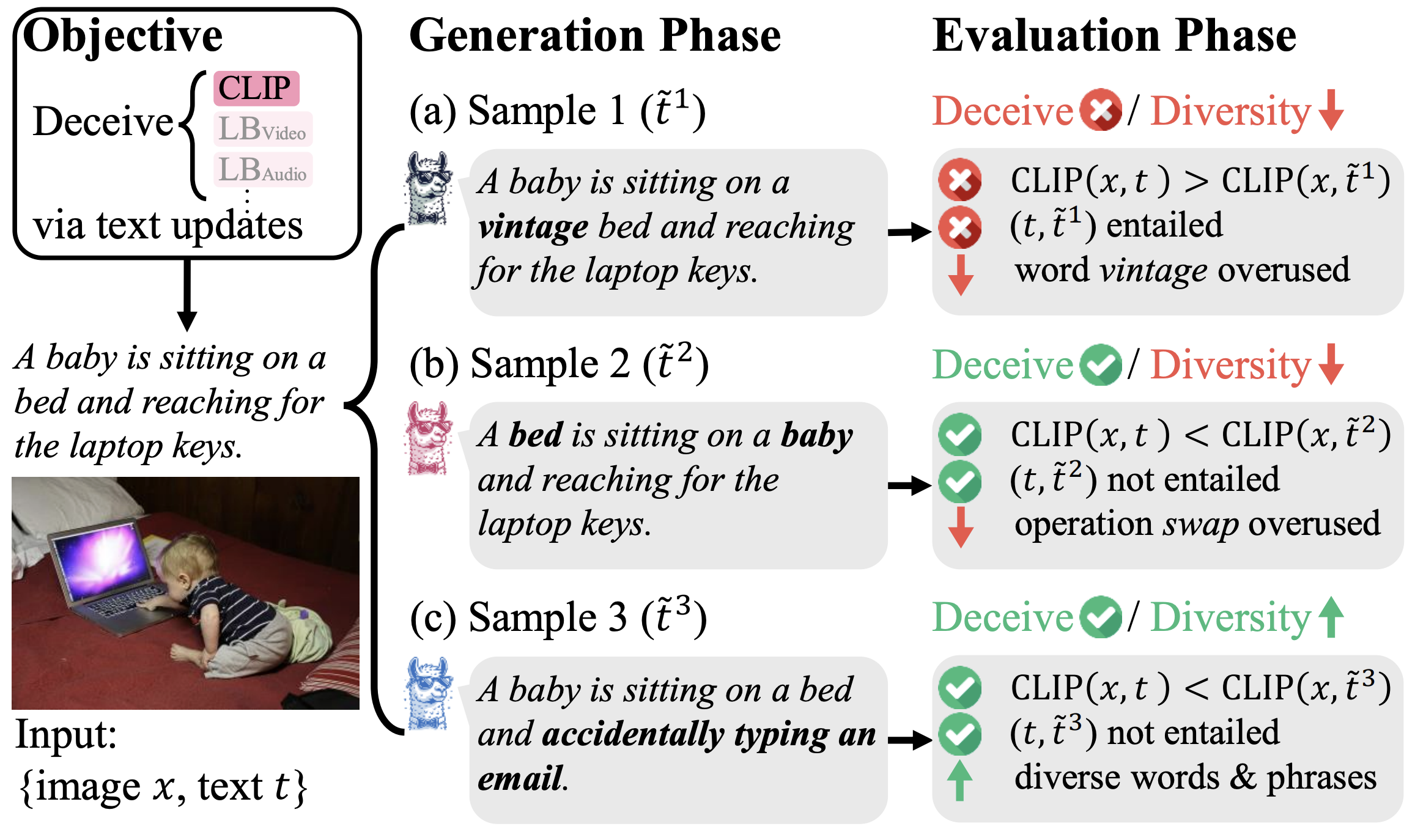

Can LLMs Deceive CLIP? Benchmarking Adversarial Compositionality of Pre-trained Multimodal Representation via Text Updates

ACL 2025

We introduce the MAC benchmark for evaluating the robustness of pre-trained multimodal representations against adversarial text updates, revealing vulnerabilities in vision-language models such as CLIP.

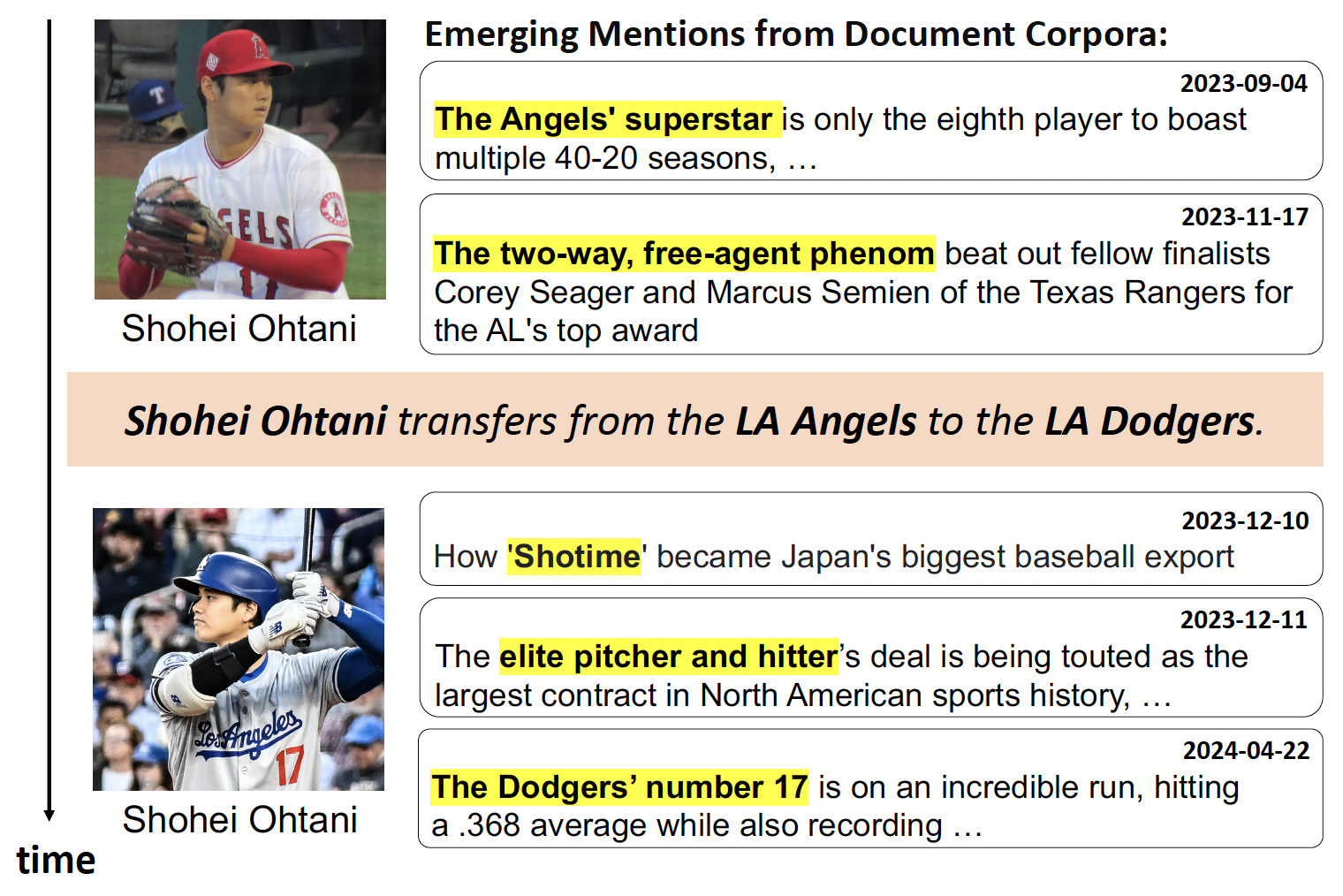

GrowOVER: How Can LLMs Adapt to Growing Real-World Knowledge?

ACL 2024

We propose QA and dialogue benchmarks that are automatically and continuously updated to assess whether LLMs can handle evolving knowledge. By having LLMs evaluate their own confidence, we enable RAG systems to adapt to new knowledge without retraining.

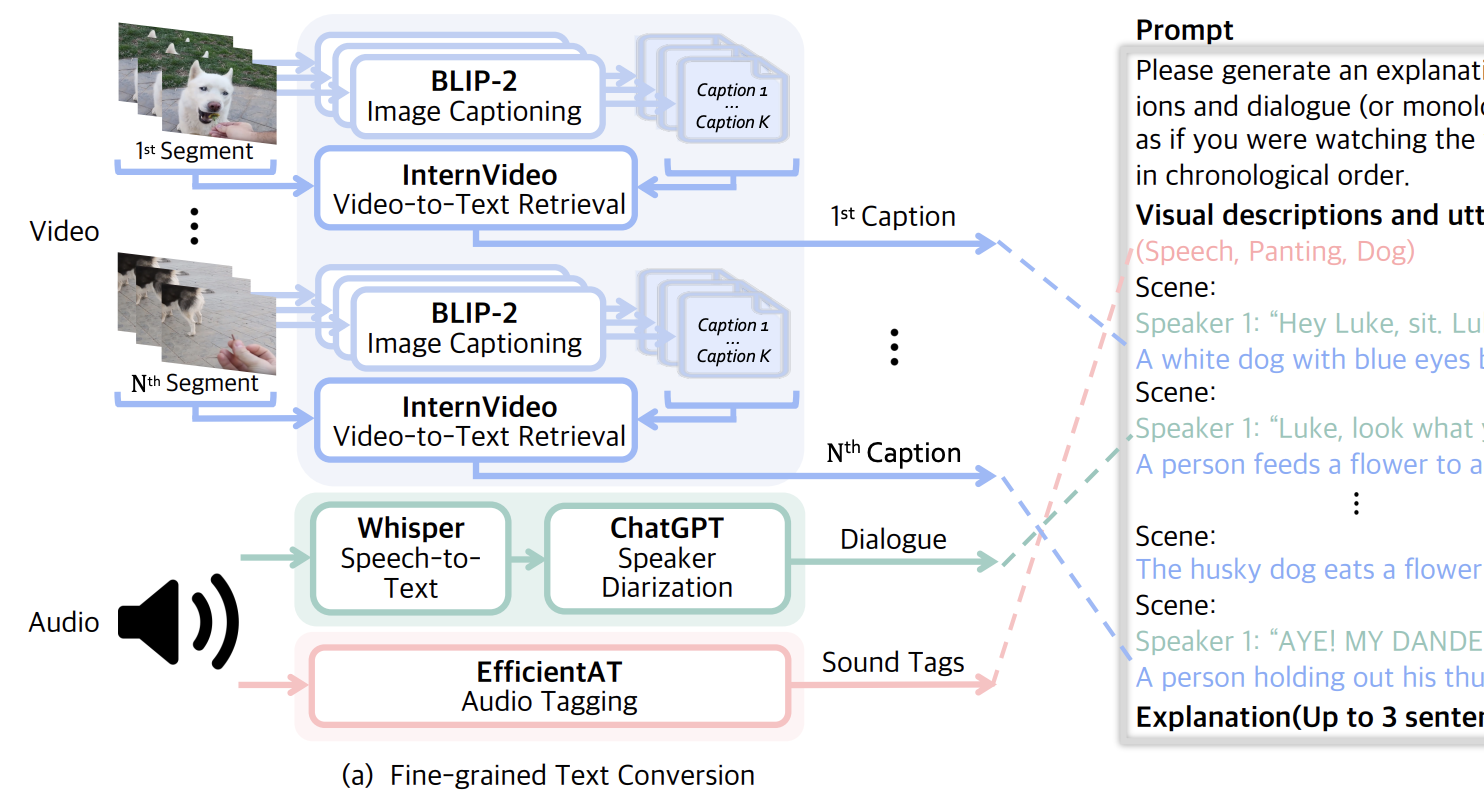

Can Language Models Laugh at YouTube Short-form Videos?

EMNLP 2023

We build a video humor explanation benchmark using a multimodal filtering pipeline to evaluate how well LLMs understand complex multimodal content such as humor. To give LLMs access to visual information, we generate multiple frame captions and filter them based on video segments.

Experiences

Research Intern

LG AI Research, Superintelligence Lab

Working on advanced reasoning and retrieval systems for large language models

March 2025 - Present

Education

M.S./Ph.D. in Computer Science and Engineering

Seoul National University

Advisor: Professor Gunhee Kim

September 2022 - Present

B.S. in Computer Science and Engineering

Yonsei University

GPA: 4.12/4.30 (Rank: 1/28)

March 2018 - August 2022